Are ChatGPT, Google Bard, and Bing Search AI using GPT-4 ready for prime time?

Not by a long shot. The hype has excited both companies and the average person to the point of delirium. ChatGPT and GPT-3 are experiments. Depending on your perspective, they are successful NLP (Natural Language Processing) AI chatbots that can structure text in a very human-like way. With huge computing power and access to an enormous amount of information, they’re capable of generating text on almost any subject. Where they fall short is in the accuracy of what they say. Let me explain…

To train any AI, you need a lot of data. ChatGPT used the internet as its data source. It took four years of crunching data, adjusting the machine learning algorithms, and crunching the data again. Some of the data sources used to train the AI are more accurate than others. For example, both Wikipedia and Reddit were used to train the AI. Although Wikipedia is an online encyclopedia written by a community of volunteers, its information is moderated and more or less accurate. In comparison, Reddit is a site composed of many online sub-forums for hobbies and interests, and most interactions are purely conversational in nature. This means the information isn’t verified and is less likely to be accurate.

The end result? Yes, the bot’s conversational abilities are very natural. However, because ChatGPT does not have the ability to tell right from wrong, nor does it possess common sense or critical thinking, it will provide incorrect answers to questions. When the data source is corrupted, the answers generated will also be corrupted.

Many also wonder how easy it would be to spread disinformation from a global standpoint. Consider this: if a country started posting millions of posts saying tree roots are above ground and branches are below ground, then that is what the AI will learn and pass on to naive users. Teachers might suddenly be inundated with kids who use ChatGPT for homework help asking for confirmation on what ChatGPT said. And what happens when those kids grow up to be teachers?
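To make the flooding scenario concrete, here is a deliberately simplified sketch in Python. It is not how ChatGPT works internally. It assumes a hypothetical toy "model" (`majority_answer`, a name invented for this example) that simply repeats whichever claim appears most often in its training posts, and the post counts are made up.

```python
from collections import Counter

def majority_answer(posts):
    """Toy 'model': return the claim that appears most often in the training posts."""
    counts = Counter(posts)
    claim, _ = counts.most_common(1)[0]
    return claim

# Hypothetical training data containing only accurate posts.
clean_posts = ["tree roots grow below ground"] * 1000
print(majority_answer(clean_posts))

# A coordinated campaign floods the data with a false claim.
poisoned_posts = clean_posts + ["tree roots grow above ground"] * 5000
print(majority_answer(poisoned_posts))
```

Nothing about the "model" changed between the two calls. Only the data did, which is the point: a system that learns from volume alone has no way to notice that the most common claim is false.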

Although the question seems silly (obviously we can go outside and dispute this claim), think of the popular idea that human blood is actually blue and only turns red when exposed to oxygen. Despite this being debunked many times, many of today’s adults grew up with well-meaning teachers passing along this “fun fact” in classrooms. Many adults today still staunchly believe human blood is blue. What happens to today’s students when ChatGPT starts making significant waves in education?

Now let’s go back to thinking globally. There is decent evidence (and even claims from the U.S. government) that suggests foreign governments participated in spreading disinformation to the American public via social media during election periods to promote civil unrest and division between multiple political parties.

ChatGPT learns via the internet and interactions with users, so what happens when foreign parties decide to target a specific country or language and flood it with misinformation? The effects on a population heavily reliant on ChatGPT could be potentially disastrous.

I’m not the only one who thinks so. Regulators in China have already told top tech companies to ban ChatGPT and other services powered by it for fear that it might spread American propaganda.

Another concern with AI is its sourcing abilities. Currently, ChatGPT is capable of providing sources for some of its statements. It might link to studies or web pages. However, if the bot cannot provide a source for its claims, it will often give a canned response (see below)

Similar to Ochatbot, ChatGPT uses human-in-the-loop to refine answers and make the AI smarter. Some of the incorrect answers ChatGPT gave when it was released are now corrected through user feedback. When given a wrong answer, the user can correct it conversationally. Sometimes it will self-correct and try again. If that doesn’t work, you can click the thumbs-down option on its response to send feedback to the developers.

Another big use case is using it like a search engine since its data source is the internet. This is a huge shift in the search engine market if it is successful. It brings up a number of new changes in how companies will handle search engine optimization (SEO).

For example, if the bot scans your site and shows the user a text answer, the user never has to visit your website. This will reduce organic traffic to the site, which means you will need to pay for advertising to get noticed. That looks like a good investment for Google and Bing. In fact, Google has already been working towards this “answer engine” model with its “position zero” feature, an optimized excerpt placed above the natural top ten results that aims to answer questions quickly and efficiently. Companies that naturally rank on the first page are already seeing lower click-through rates to their sites, as most internet users are satisfied with the answers provided in position zero.

This model currently functions off of something Google calls the “knowledge graph.” With the introduction of ChatGPT for search engines, we are looking at a massive shift in SEO and advertising.

Don’t get me wrong: this is a huge leap forward for AI, and hopefully we can come up with solutions to these problems.

So What is ChatGPT Good for?

The best use for ChatGPT is to help generate ideas, from writing a story or an article to suggesting fixes for bugs in code. Many writers now use ChatGPT alongside other programs (Lex | Jasper | CopyAI | Writesonic | Moonbeam | Sudowrite | Writer | Copylime).

Although many worry that utilizing ChatGPT for writing is plagiarism, ChatGPT is capable of more than just writing ideas. When used correctly, ChatGPT is a great collaborator. It can provide you with outlines, recommend readings, and offer constructive criticism on your writing. The next time you write something, consider asking ChatGPT, “What constructive feedback can you give me about this writing?”

Its ability to source a huge amount of data quickly is also amazing. This technology can be used in a number of fields with a lot of data such as medicine, astronomy, and many more.

What’s Next?

This is an exciting time for those of us in the AI field. Having a tool that can read the internet and present an answer in a perfectly conversational tone is amazing. The bigger problem is still the same issue that has been plaguing AI for years: how can we create intelligent AI and machine learning without tediously crunching data and analyzing massive sources? I suspect the solution to this problem will not be the common machine learning approach of weighting words or images and running and re-running the algorithm until the machine can tell the difference between a “1” and a “7” in messy handwriting.

Beyond the method used to train the AI, rampant disinformation and the bot’s inability to remember conversations and past questions dilute its “intelligence.” It is a powerful program, but not quite the savior it is being touted as. Plus, as I said, the bot lacks common sense, though that may never get solved. I know many people with years of “life training” as adults who have very little common sense.

Further Reading

ChatGPT explains its learning model methods:

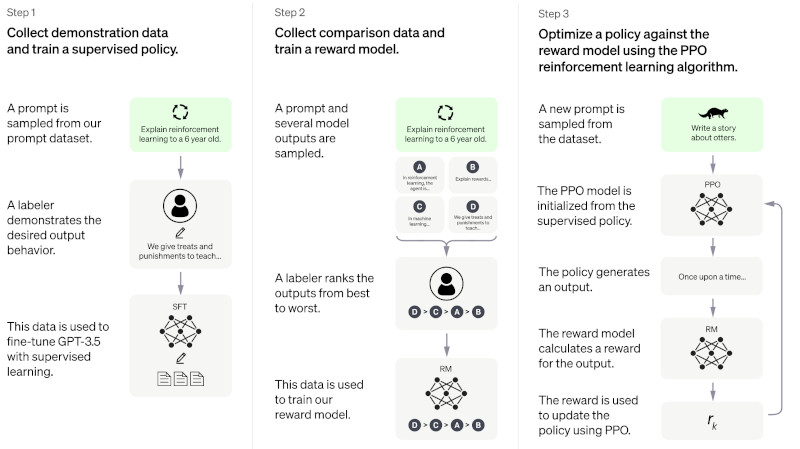

We trained this model using Reinforcement Learning from Human Feedback (RLHF), using the same methods as InstructGPT, but with slight differences in the data collection setup. We trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides—the user and an AI assistant. We gave the trainers access to model-written suggestions to help them compose their responses. We mixed this new dialogue dataset with the InstructGPT dataset, which we transformed into a dialogue format.

To create a reward model for reinforcement learning, we needed to collect comparison data, which consisted of two or more model responses ranked by quality. To collect this data, we took conversations that AI trainers had with the chatbot. We randomly selected a model-written message, sampled several alternative completions, and had AI trainers rank them. Using these reward models, we can fine-tune the model using Proximal Policy Optimization. We performed several iterations of this process.
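The comparison step described above can be sketched with a pairwise ranking loss, one common way to train a reward model from ranked responses. This is a minimal illustration and not OpenAI's code: the function name `pairwise_ranking_loss` and the example scores are assumptions, and in a real system the scores would come from a neural network rather than being typed in by hand.

```python
import math

def pairwise_ranking_loss(score_preferred, score_rejected):
    """Penalize the reward model when the rejected response outscores the preferred one.

    Computes -log(sigmoid(score_preferred - score_rejected)),
    written in the equivalent form log(1 + exp(-difference)).
    """
    difference = score_preferred - score_rejected
    return math.log(1.0 + math.exp(-difference))

# Hypothetical scores for two responses that a human trainer has ranked.
agrees_with_trainer = pairwise_ranking_loss(2.0, -1.0)     # preferred response scored higher
disagrees_with_trainer = pairwise_ranking_loss(-1.0, 2.0)  # preferred response scored lower

print(agrees_with_trainer < disagrees_with_trainer)
```

Training pushes this loss down, so the reward model gradually learns to score responses the way the human rankers did. Note that it learns what the rankers preferred, which is not necessarily what is true.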

ChatGPT is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022. You can learn more about the 3.5 series here. ChatGPT and GPT 3.5 were trained on an Azure AI supercomputing infrastructure.

Limitations

ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as: (1) during RL training, there’s currently no source of truth; (2) training the model to be more cautious causes it to decline questions that it can answer correctly; and (3) supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows.

ChatGPT is sensitive to tweaks to the input phrasing or attempting the same prompt multiple times. For example, given one phrasing of a question, the model can claim to not know the answer, but given a slight rephrase, can answer correctly.

The model is often excessively verbose and overuses certain phrases, such as restating that it’s a language model trained by OpenAI. These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimization issues.

Ideally, the model would ask clarifying questions when the user provided an ambiguous query. Instead, our current models usually guess what the user intended.

While we’ve made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior. We’re using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now. We’re eager to collect user feedback to aid our ongoing work to improve this system.

What does ChatGPT say?

I asked ChatGPT some questions and its responses were pretty accurate. Below are the results.

Question: what do you not know

Answer: As an AI language model, there are countless pieces of information that I do not know. Despite being trained on a vast dataset of text, there are always new topics, fields, and areas of knowledge that are beyond my current scope. Additionally, my training data only goes up to a certain point in time, so I may not have access to the most up-to-date information on certain topics.

Furthermore, there are some types of knowledge that are difficult for AI language models to acquire, such as common sense, intuition, and creativity. While I can provide factual information and answer questions based on what I have been trained on, I may not always be able to provide insights or solutions that require these more complex types of knowledge.

Question: what are the downsides of using chat gpt

Answer: As an AI language model, ChatGPT has several limitations and potential downsides that you should be aware of:

- Lack of common sense and context: While ChatGPT is trained on vast amounts of text data, it may not have the same level of common sense and contextual understanding as a human. This can lead to misunderstandings and errors in communication.

- Biases in training data: ChatGPT is trained on text data from the internet, which can contain biases and prejudices. These biases can influence the responses generated by ChatGPT.

- Limited understanding of emotions: ChatGPT may not be able to understand the emotional context of a conversation, such as sarcasm, humor, or irony, which can lead to inappropriate or insensitive responses.

- Lack of personalization: ChatGPT is a general-purpose language model and does not have the ability to personalize its responses to individual users.

- Security and privacy concerns: ChatGPT may record and store conversations, which can raise concerns about data privacy and security.

- Technical issues: Like any technology, ChatGPT may experience technical issues such as slow response times, system failures, or bugs.

Overall, while ChatGPT can be a helpful tool for generating responses to text-based queries, it is important to recognize its limitations and potential downsides.

Question: is chat gpt ready for widespread corporate use?

Answer: ChatGPT can be used for certain corporate applications, such as customer support chatbots, but its readiness for widespread corporate use depends on several factors.

Firstly, the specific use case needs to be considered. ChatGPT is best suited for applications that involve generating natural language responses to text-based queries or conversations. For example, it could be used to generate responses to frequently asked questions or to provide basic customer support.

Secondly, the level of customization and personalization required for the use case needs to be considered. ChatGPT is a general-purpose language model and may not be able to provide highly personalized responses that are specific to individual users or businesses. In some cases, a custom language model may be required to achieve this level of personalization.

Thirdly, security and privacy concerns need to be addressed. Corporate use of ChatGPT may involve sensitive data or conversations, so appropriate security measures need to be in place to ensure data privacy and prevent data breaches.

Finally, technical infrastructure needs to be considered, including the capacity to handle large volumes of data and requests, as well as the ability to integrate with existing systems and workflows.

Overall, while ChatGPT can be used for certain corporate applications, its readiness for widespread corporate use depends on the specific use case, level of personalization required, security and privacy concerns, and technical infrastructure.

Question: Does chat gpt contain data past 2021?

Answer: As a language model, ChatGPT is constantly being trained on new and updated data to improve its performance. However, the specific version of ChatGPT that I am based on has a knowledge cutoff of 2021, meaning that it was trained on text data up until that point in time.

Since my training data cutoff was in 2021, my responses may not reflect any events or developments that have occurred since that time. However, new versions of ChatGPT are constantly being developed and trained on more recent data, so it is possible that there are newer versions of ChatGPT that have access to more up-to-date information.

Frequently Asked Questions

How does ChatGPT generate responses?

ChatGPT generates responses by analyzing the input question or statement and using a language model to generate a response based on patterns and similarities found in the data it was trained on.
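As a rough illustration of pattern-based generation, the sketch below builds a reply one word at a time from a hand-written table of next-word probabilities. The table `NEXT_WORD_PROBS` and the function `generate` are hypothetical. A real model like ChatGPT computes such probabilities with a neural network over the whole conversation, and it usually samples from them instead of always taking the top choice.

```python
# Hypothetical next-word probabilities, standing in for what a trained model would compute.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"<end>": 1.0},
}

def generate(start="<start>", max_words=10):
    """Greedy generation: repeatedly append the most probable next word."""
    word = start
    output = []
    for _ in range(max_words):
        options = NEXT_WORD_PROBS.get(word)
        if not options:
            break  # no known continuation for this word
        word = max(options, key=options.get)
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)

print(generate())  # the cat sat
```

The loop never checks whether the sentence is true. It only follows the most likely continuation, which is why fluent output and accurate output are separate questions.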

How does ChatGPT learn?

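ChatGPT’s underlying model first learns from a large corpus of text without human labels, using statistical methods to identify patterns and relationships between words and phrases. It is then fine-tuned with human-written example conversations and Reinforcement Learning from Human Feedback (RLHF), as described in the Further Reading section above.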
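The idea of identifying statistical patterns between words can be shown with a tiny word-pair counter. This is a toy stand-in that is far simpler than the neural network training ChatGPT uses, and the three-sentence corpus and the function name `learn_bigrams` are made up for illustration.

```python
from collections import Counter, defaultdict

def learn_bigrams(corpus):
    """Estimate P(next word | current word) by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1

    probabilities = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        probabilities[current] = {w: c / total for w, c in followers.items()}
    return probabilities

# Hypothetical training text: two accurate sentences and one repeating a myth.
corpus = [
    "blood is red",
    "blood is red",
    "blood is blue",
]
model = learn_bigrams(corpus)
print(model["is"])
```

After training, the toy model assigns "blue" about a one-in-three chance of following "is", simply because the myth made up a third of the corpus. The learned probabilities mirror the proportions in the data, accurate or not.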

Can ChatGPT understand emotions and sarcasm?

While ChatGPT can generate responses that appear to understand emotions and sarcasm, it does not actually understand emotions or sarcasm in the way humans do. It relies on patterns in the language to generate responses.

How does ChatGPT handle sensitive topics?

ChatGPT can generate responses on sensitive topics, but it is important to keep in mind that its responses are generated based on patterns found in the data it was trained on and may not always be sensitive or appropriate.

Can ChatGPT recognize personal information?

ChatGPT is not designed to recognize or retain personal information. However, it is important to be mindful of the information shared during conversations with ChatGPT.
